The following sections discuss the decision points and considerations that will help you choose the product that best fits your requirements. For a quick overview of the capabilities of each product, see Table 7.1.

TABLE 7.1 Streaming product capabilities

| Feature | Azure Stream Analytics | Azure Databricks | HDInsight with Spark Streaming | HDInsight 3.6 with Storm |
| --- | --- | --- | --- | --- |
| Temporal / Windowing | Yes | Yes | Yes | Yes |
| Data Format | AVRO, JSON, or CSV | Any | Any | Any |
| Paradigm | Declarative | Both | Both | Imperative |
| Language | SQL, JavaScript | C#/F#, Scala, R, Python, Java | C#/F#, Scala, R, Python, Java | C#, Java |
| Pricing | Stream units | Databricks unit | Cluster hour | Cluster hour |

In addition to the features in Table 7.1, the following sections discuss real‐time versus near real‐time streaming, interoperability, and scaling in more detail. Each of these factors will influence which products you choose to design and develop your data stream solution.
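To make the Temporal / Windowing row in Table 7.1 more concrete, the following is a minimal Python sketch of a tumbling window, that is, a fixed‐size, non‐overlapping time window over which events are aggregated. It is an illustration only and not how any of the listed products implement windowing; the function name `tumbling_window_avg` and the sample readings are hypothetical.

```python
from collections import defaultdict
from datetime import datetime, timezone


def tumbling_window_avg(events, window_seconds):
    """Average (timestamp, value) events per fixed, non-overlapping window.

    Hypothetical helper for illustration; timestamps must be timezone-aware.
    """
    # window start (epoch seconds) -> [running total, event count]
    buckets = defaultdict(lambda: [0.0, 0])
    for ts, value in events:
        epoch = int(ts.timestamp())
        # Align the event to the start of the window that contains it.
        window_start = epoch - (epoch % window_seconds)
        buckets[window_start][0] += value
        buckets[window_start][1] += 1
    return {
        datetime.fromtimestamp(start, tz=timezone.utc): total / count
        for start, (total, count) in sorted(buckets.items())
    }


# Hypothetical sensor readings: two fall into the first 10-second window,
# one into the second.
events = [
    (datetime(2024, 1, 1, 12, 0, 1, tzinfo=timezone.utc), 20.0),
    (datetime(2024, 1, 1, 12, 0, 7, tzinfo=timezone.utc), 22.0),
    (datetime(2024, 1, 1, 12, 0, 12, tzinfo=timezone.utc), 30.0),
]
result = tumbling_window_avg(events, window_seconds=10)
```

Each event belongs to exactly one window here, which is what distinguishes a tumbling window from overlapping window types.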

Real‐Time and Near Real‐Time

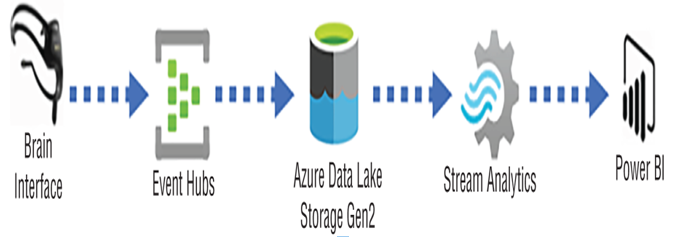

Figure 7.1 illustrates a general data streaming architecture made up of an ingestion, a transformation, and a presentation component. Breaking Figure 7.1 down into more granular solutions makes the difference between real‐time and near real‐time data stream processing easier to see. Figure 7.2 illustrates how a real‐time data stream processing solution could look. In Exercise 7.1, you will create a real‐time data streaming solution similar to the one shown in Figure 7.2. A solution like this is often referred to as live streaming.

FIGURE 7.2 Azure real‐time stream processing

The data stream is generated by an IoT device or an application and is sent directly to the Event Hub namespace. An Azure Stream Analytics job that has that Event Hub namespace configured as an input receives the data stream immediately upon its arrival in the event hub. Remember that you provisioned the Event Hub namespace in Exercise 3.16 and provisioned and configured an Azure Stream Analytics job in Exercise 3.17. Finally, the results are streamed, also in real time, to a Power BI workspace for visualization. As shown in Figure 7.3, instead of being streamed directly into the Azure Stream Analytics job, the data can be persisted on an ADLS container.

FIGURE 7.3 Azure near real‐time stream processing

Once the data is stored on the ADLS container, you can decide when to process it. This near real‐time or on‐demand approach can result in lower costs, because you control when processing happens and can make the batch sizes smaller, which requires less compute power. If the data stream is not time‐critical and you can wait some minutes or tens of minutes before it becomes available, then this method is much more cost efficient. An Azure Stream Analytics job supports an Azure Blob Storage container as an input stream, in addition to IoT Hub and Event Hubs. Therefore, in the scenario shown in Figure 7.3, the Azure Blob container (i.e., the ADLS container) is configured as the input stream instead of Event Hubs. Once the data in the blob file in the ADLS container is processed, it becomes visible via a Power BI workspace.

Alternatives to using Azure Stream Analytics in a near real‐time scenario are Azure Functions and Azure App Service WebJobs, both of which were introduced in Chapter 1, “Gaining the Azure Data Engineer Associate Certification.” Azure Functions supports subscribing to events that happen on an Azure Blob container, for example, when a file is written to it. Therefore, an Azure function can be used to process the persisted file, the same as Azure Stream Analytics. Azure Functions has no built‐in interface with Power BI, which means you would need to build support for that yourself. As shown in Table 7.1, you can use SQL and JavaScript in Azure Stream Analytics. An Azure function supports most programming languages; therefore, most solutions you design can be achieved with custom code.

An Azure App Service WebJob can be viewed as a batch job‐like product, but it offers two additional possibilities. The first is that, like an Azure function, a WebJob can subscribe to an Azure storage account and be notified when a file arrives at a specific location in a storage container. Once notified, you can perform your analysis of the file. The second is that, as with Azure Functions, you get a choice of additional programming languages. However, an Azure App Service WebJob is not a serverless product; it is instead PaaS. This means you can select a dedicated amount of compute power to execute your processing that is always online and ready to process. Additionally, you can run other App Service Web Apps on the same host to share compute power and reduce costs. Table 7.2 provides a structured view of Azure Functions and Azure App Service WebJobs.

TABLE 7.2 Additional streaming product capabilities

| Feature | Azure Functions | WebJobs |
| --- | --- | --- |
| Temporal/Windowing | No | No |
| Data Format | Any | Any |
| Paradigm | Imperative | Imperative |
| Languages | C#, F#, Java, Node.js, Python | C#, Java, Node.js, PHP, Python |
| Pricing | Per execution | Per plan hour |

Note that for certain scenarios, as previously stated, Azure Functions and WebJobs are valid product choices for near real‐time data streaming solutions.
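As a rough illustration of the kind of custom code an Azure function or WebJob might run once it is notified that a file has arrived, the following Python sketch summarizes the contents of a persisted file. It assumes the file holds newline‐delimited JSON records with `deviceId` and `reading` fields; those field names, the `summarize_blob` function, and the sample data are hypothetical, and the wiring to a storage event and to Power BI is deliberately left out.

```python
import json
from collections import defaultdict


def summarize_blob(content: bytes) -> dict:
    """Return the average 'reading' per 'deviceId' for a persisted file.

    Assumes newline-delimited JSON; both field names are hypothetical.
    """
    # device id -> [running total, record count]
    totals = defaultdict(lambda: [0.0, 0])
    for line in content.decode("utf-8").splitlines():
        if not line.strip():
            continue  # ignore blank lines between records
        record = json.loads(line)
        entry = totals[record["deviceId"]]
        entry[0] += float(record["reading"])
        entry[1] += 1
    return {device: total / count for device, (total, count) in totals.items()}


# Hypothetical file contents, as they might be read from a storage container.
sample = (
    b'{"deviceId": "a", "reading": 1.0}\n'
    b'{"deviceId": "a", "reading": 3.0}\n'
    b'{"deviceId": "b", "reading": 5.0}\n'
)
summary = summarize_blob(sample)
```

Because neither product provides windowing, as Table 7.2 indicates, any time‐based grouping of the records would also have to be written by hand in code like this.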